Main author has another great paper!

Image-to-image translation with conditional adversarial networks

CVPR 2017

More cited than CycleGAN, and a somewhat different idea

More general, not just style transfer

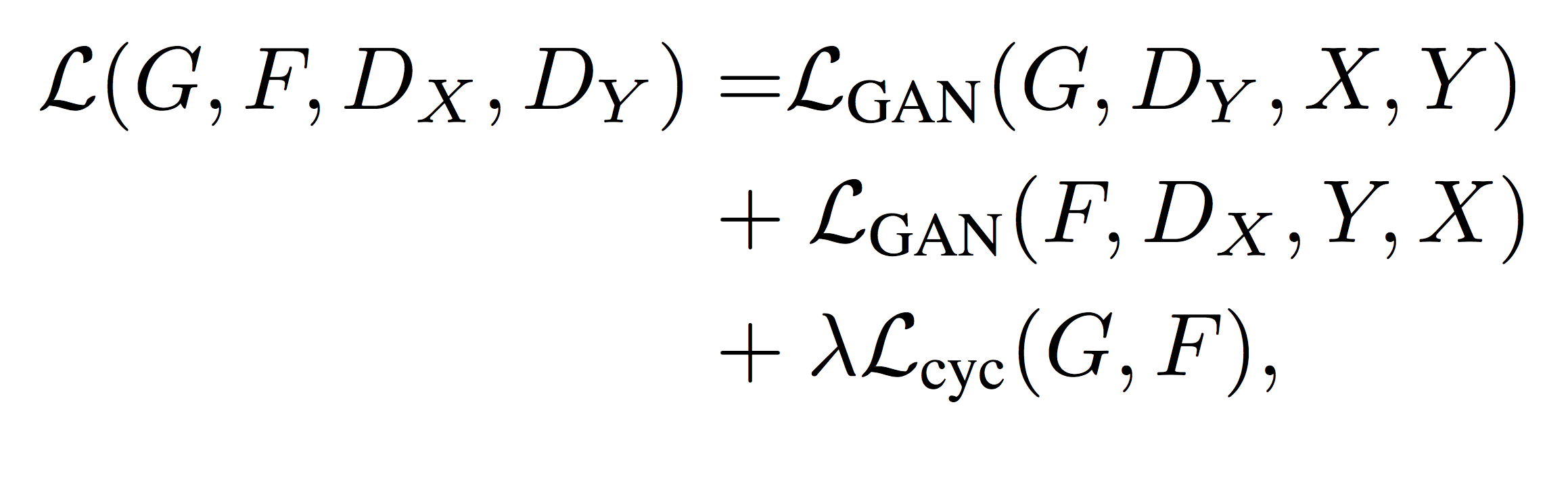

Create and estimate a correspondence between two high-dimensional distributions

But why is it so big and popular?

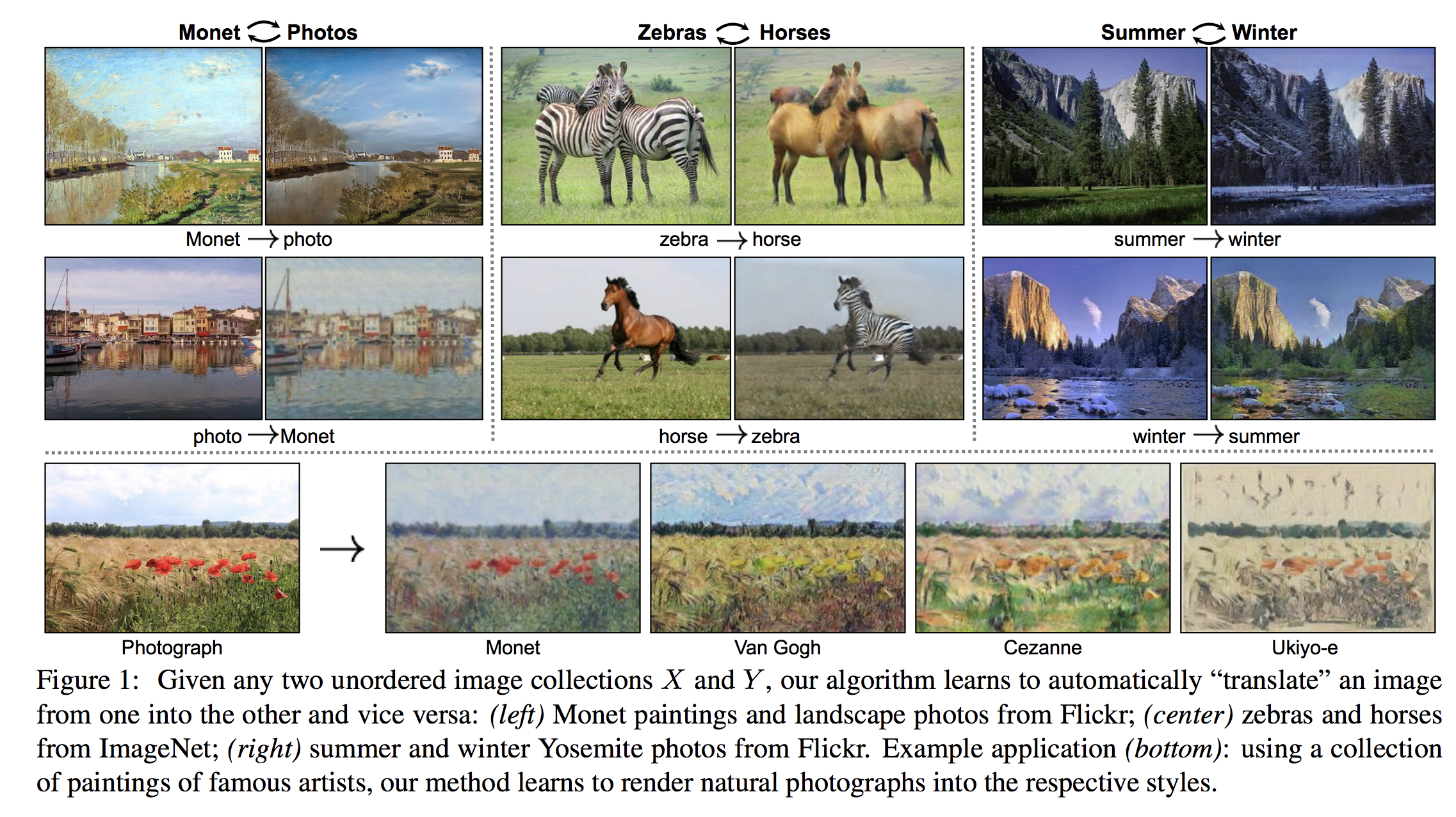

We do not need matching samples!

Opens up possibilities for image synthesis

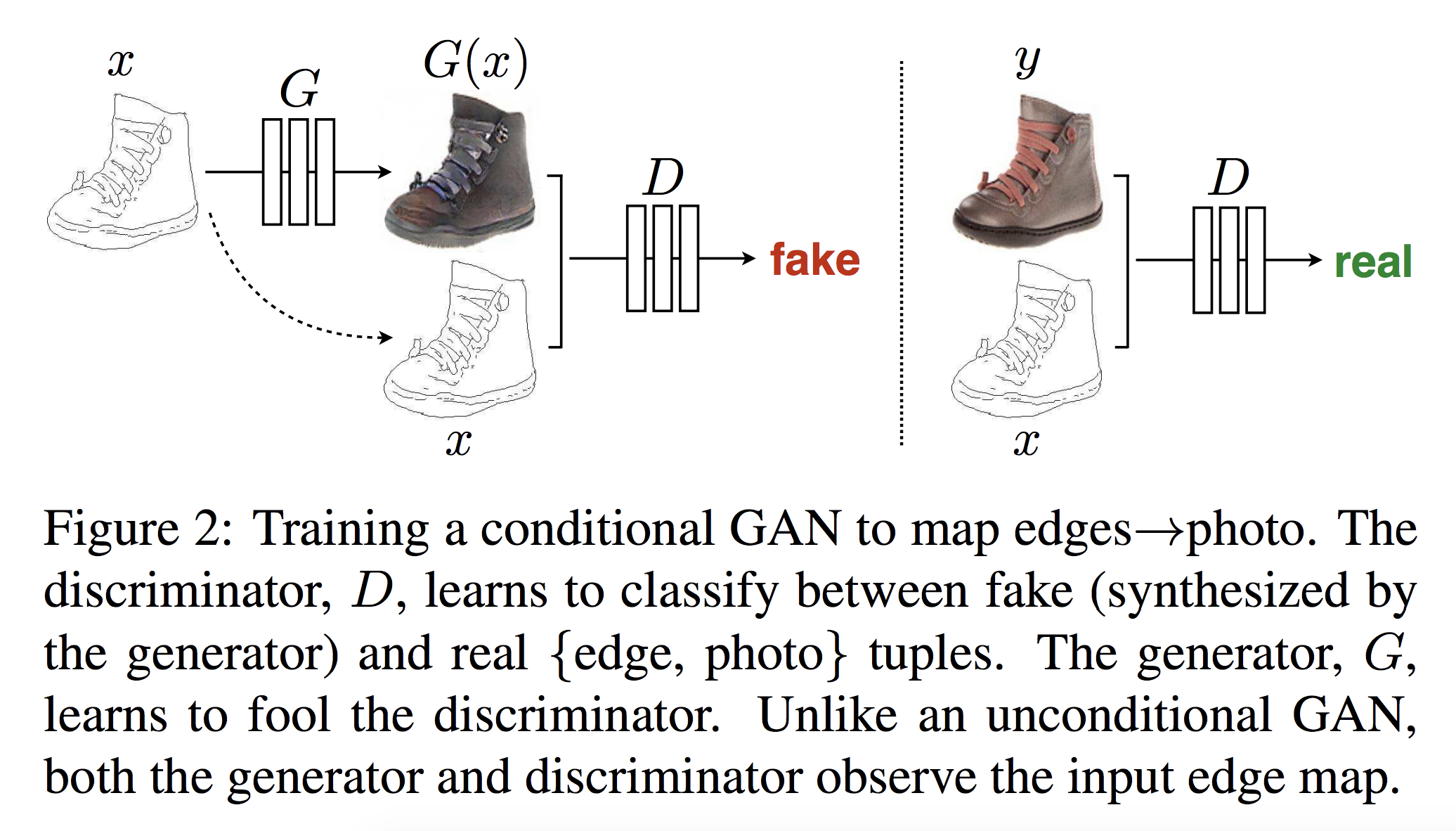

We could use a GAN to generate an image in the other domain and have a discriminator tell whether it was generated or real.

In theory this could work

In practice it doesn’t

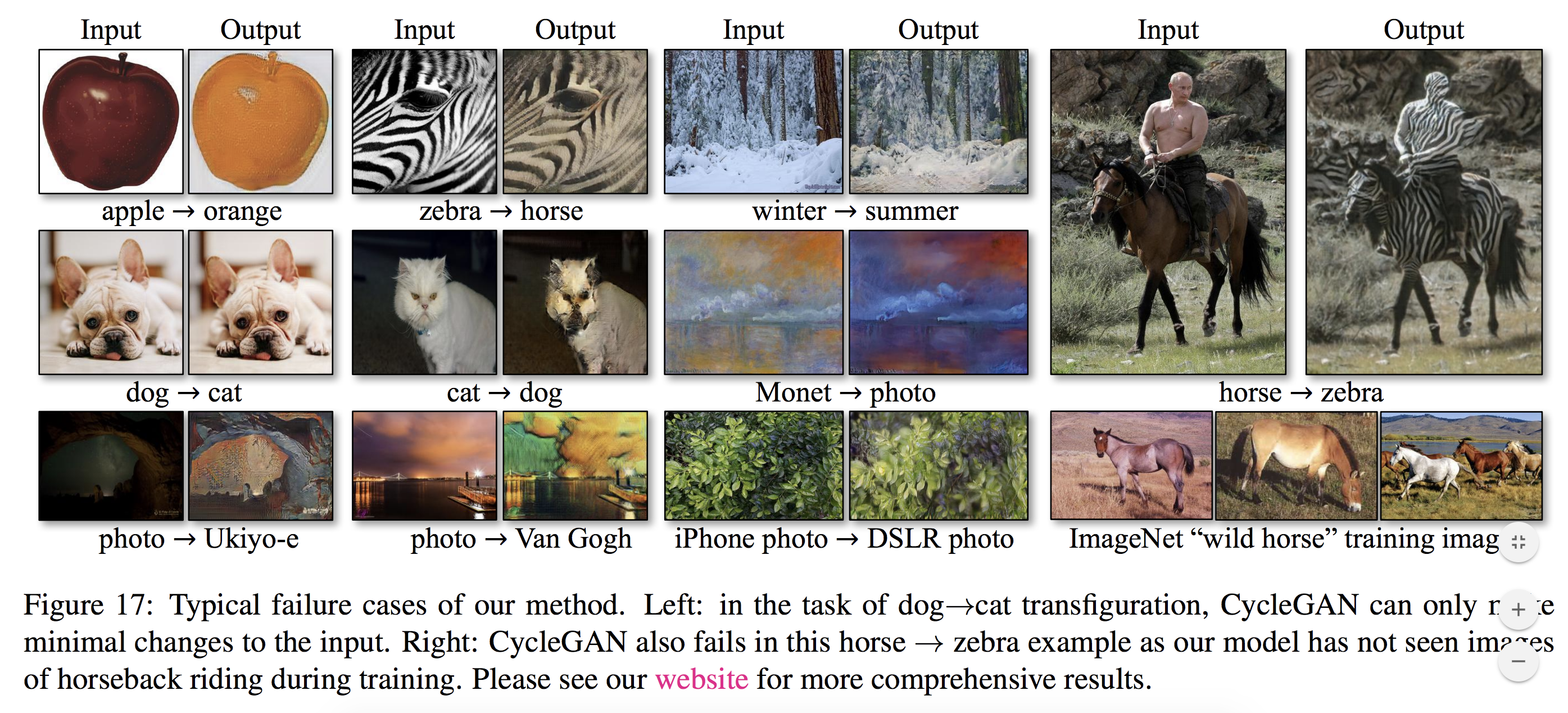

GANs alone do not guarantee that inputs and outputs pair up in meaningful ways: infinitely many mappings produce the same output distribution.

Very prone to mode collapse, where every input is mapped to the same output.
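
A toy sketch of the ambiguity, assuming two hypothetical three-element domains (the names `domain_x`, `domain_y` and the uniform distributions are made up for illustration). Every bijection pushes the distribution of X onto the distribution of Y equally well, so matching distributions alone cannot pick out the meaningful pairing.

```python
from collections import Counter
from itertools import permutations

# Hypothetical toy domains: three "images" in X and three in Y.
domain_x = ["x0", "x1", "x2"]
domain_y = ["y0", "y1", "y2"]


def output_distribution(mapping):
    """Counts over Y obtained by pushing every element of X through mapping."""
    return Counter(mapping[x] for x in domain_x)


target = Counter(domain_y)  # uniform distribution over Y

# Every bijection X -> Y is a candidate "generator".
bijections = [dict(zip(domain_x, perm)) for perm in permutations(domain_y)]
indistinguishable = [m for m in bijections if output_distribution(m) == target]
print(len(bijections), len(indistinguishable))  # 6 6

# Mode collapse for comparison: every input goes to the same output.
collapsed = {x: "y0" for x in domain_x}
collapsed_matches = output_distribution(collapsed) == target
each_output_valid = all(y in domain_y for y in collapsed.values())
print(collapsed_matches, each_output_valid)  # False True
```

The collapsed mapping does not match the target distribution, but each individual output is still a valid member of Y, which is all a per-sample check can see.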

Generator architecture: a design that works well for neural style transfer and super-resolution

Two stride-2 convolutions

Several residual blocks

Two fractionally strided convolutions, with stride \(0.5\)

6 residual blocks for \(128 \times 128\) images, 9 for higher resolutions

Use instance normalization
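
A rough sketch of how the spatial resolution moves through the generator described above. The kernel size 3, padding 1 and output padding 1 are assumptions (the notes give only the strides), and each residual block is modelled as a single size-preserving convolution.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def transposed_conv_out(size, kernel, stride, padding, output_padding):
    """Output length of a transposed (fractionally strided) convolution."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def generator_sizes(size, num_res_blocks):
    """Spatial size after each stage, starting with the input size."""
    sizes = [size]
    for _ in range(2):  # two stride-2 convolutions (downsampling)
        size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes.append(size)
    for _ in range(num_res_blocks):  # residual blocks keep the resolution
        size = conv_out(size, kernel=3, stride=1, padding=1)
        sizes.append(size)
    for _ in range(2):  # two stride-1/2 convolutions (upsampling)
        size = transposed_conv_out(
            size, kernel=3, stride=2, padding=1, output_padding=1
        )
        sizes.append(size)
    return sizes


small = generator_sizes(128, num_res_blocks=6)
large = generator_sizes(256, num_res_blocks=9)
print(small[0], small[2], small[-1])  # 128 32 128
print(large[0], large[2], large[-1])  # 256 64 256
```

Under these assumptions the residual blocks run at a quarter of the input resolution, and the output has the same size as the input.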

Use \(70 \times 70\) PatchGANs for the discriminator

Classify overlapping patches from generated and real images as real or fake

Fully convolutional, so it scales to larger images automatically

Has fewer parameters than a full image discriminator
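
A small sketch of where the \(70 \times 70\) figure comes from and why the discriminator scales with image size. The layer list is an assumption, not taken from these notes: three \(4 \times 4\) stride-2 convolutions followed by two \(4 \times 4\) stride-1 convolutions, all with padding 1.

```python
# Assumed PatchGAN layers as (kernel, stride, padding); not given in the notes.
PATCHGAN_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def receptive_field(layers):
    """Input patch size seen by one output unit, computed back to front."""
    rf = 1
    for kernel, stride, _ in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf


def output_grid(size, layers):
    """Side length of the grid of per-patch real/fake scores."""
    for kernel, stride, padding in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


print(receptive_field(PATCHGAN_LAYERS))   # 70
print(output_grid(256, PATCHGAN_LAYERS))  # 30
print(output_grid(512, PATCHGAN_LAYERS))  # 62
```

The same weights are reused at every position, so a larger input only yields a larger grid of patch scores; the parameter count does not change.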
